If $s$ is a complex number and $a = \operatorname{Re}(s)$, then $\operatorname{abs}(n^s) = n^a$

Definition 1: complex number

Any value expressible in the form $a + bi$, where $a, b$ are real numbers and $i^2 = -1$.

Note that $\cos x + i\sin x$ fits this definition with $a = \cos x$ and $b = \sin x$.

Definition 2: conjugate of a complex number

The conjugate of a complex number a + bi is the complex number a - bi. The conjugate of a complex number a - bi is the complex number a + bi.

If $s$ is a complex number, its conjugate is denoted as $\bar{s}$ or as $s'$.

Definition 3: absolute value for complex numbers

$\operatorname{abs}(a + bi) = \sqrt{a^2 + b^2}$

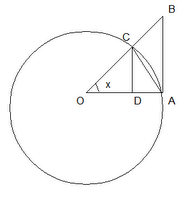

This definition may seem strange at first, but it is a natural generalization of the absolute value for real numbers. One key role of the absolute value for real numbers is that it gives the distance between two real values: the distance between two real numbers $x, y$ is $\operatorname{abs}(x - y)$. This is true regardless of whether $x$ or $y$ is larger.

Subtraction gives distance on a line. With complex numbers, we are dealing with a plane, where distance comes from the Pythagorean Theorem ($c^2 = a^2 + b^2$). For two points $(x_1, y_1)$ and $(x_2, y_2)$, the horizontal and vertical separations are $\operatorname{abs}(x_1 - x_2)$ and $\operatorname{abs}(y_1 - y_2)$. The distance between the points is the hypotenuse of the right triangle with those two legs, which is $\sqrt{\operatorname{abs}(x_1 - x_2)^2 + \operatorname{abs}(y_1 - y_2)^2}$. [See David Joyce's article for more details]
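The distance idea above can be checked numerically. The following is a minimal Python sketch, not part of the original argument; the helper names `complex_abs` and `distance` are made up for illustration, and the built-in `abs` on Python's `complex` type is used only as a cross-check.

```python
import math


def complex_abs(a: float, b: float) -> float:
    """Absolute value of a + bi as in Definition 3: sqrt(a^2 + b^2)."""
    return math.sqrt(a * a + b * b)


def distance(z1: complex, z2: complex) -> float:
    """Distance between two points of the plane, computed as abs(z1 - z2)."""
    d = z1 - z2
    return complex_abs(d.real, d.imag)


# A 3-4-5 right triangle: legs 3 and 4, hypotenuse 5.
print(complex_abs(3.0, 4.0))
# Python's built-in abs on a complex value uses the same definition.
print(abs(complex(3.0, 4.0)))
# Points (1, 2) and (4, 6) are separated by legs of length 3 and 4.
print(distance(1 + 2j, 4 + 6j))
```

All three calls describe the same 3-4-5 triangle, so they should agree up to floating-point rounding.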

Definition 4: Re(s)

Re(s) is the real part of a complex number. If s = a + bi is a complex number, then Re(s) = a.

Definition 5: Im(s)

Im(s) is the imaginary part of a complex number. If s = a + bi is a complex number, then Im(s) = b.

Lemma 1: $\operatorname{abs}(\cos x + i\sin x) = 1$

Proof:

(1) $\operatorname{abs}(\cos x + i\sin x) = \sqrt{\cos^2 x + \sin^2 x}$ [See Definition 3 above]

(2) Now, $\cos^2 x + \sin^2 x = 1$ by the Pythagorean identity.

(3) So $\operatorname{abs}(\cos x + i\sin x) = \sqrt{1} = 1$.

QED
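As a quick sanity check of Lemma 1, here is a small Python sketch. The function name `unit_circle_abs` and the sample angles are arbitrary choices for illustration; floating-point arithmetic means the result is only expected to match 1 up to rounding.

```python
import math


def unit_circle_abs(x: float) -> float:
    """Return abs(cos x + i sin x), which Lemma 1 says equals 1."""
    return abs(complex(math.cos(x), math.sin(x)))


# Arbitrary sample angles, including negative and large values.
for x in (0.0, 1.0, math.pi / 3, -2.5, 100.0):
    print(x, unit_circle_abs(x))
```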

Theorem 2: The absolute value of complex power

If $s$ is a complex number, $n$ is a positive real, and $a = \operatorname{Re}(s)$, then:

$\operatorname{abs}(n^s) = n^a$

Proof:

(1) There exist real numbers a,b such that s = a + bi [See definition of a complex number above]

(2) $n^s = n^{a + bi} = e^{(a + bi)\ln n}$ [by the definition $n^s = e^{s \ln n}$ for a positive real $n$]

(3) $e^{(a + bi)\ln n} = e^{a\ln n + bi\ln n} = e^{a\ln n} \cdot e^{bi\ln n} = n^a e^{bi\ln n}$

(4) Using Euler's Formula, we have:

$e^{bi\ln n} = \cos(b\ln n) + i\sin(b\ln n)$

(5) Let's clean it up by letting $x = b\ln n$ so that we have:

$\cos(b\ln n) + i\sin(b\ln n) = \cos x + i\sin x$.

(6) So putting it all together, we have:

$n^s = n^a(\cos x + i\sin x)$

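(7) Since $n$ is a positive real and $a$ is a real, $n^a$ is a positive real, so $\operatorname{abs}(n^a) = n^a$.

So that:

$\operatorname{abs}(n^a(\cos x + i\sin x)) = n^a \cdot \operatorname{abs}(\cos x + i\sin x)$

since for any positive real $c$ and reals $u, v$, $\operatorname{abs}(c(u + vi)) = \sqrt{c^2u^2 + c^2v^2} = c\sqrt{u^2 + v^2}$.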

(8) Using Lemma 1 above, we now have:

$\operatorname{abs}(n^s) = n^a$ where $a = \operatorname{Re}(s)$.

QED
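Theorem 2 can also be checked numerically. The sketch below follows the proof's route, computing the power as e raised to s times ln(n), and compares its absolute value with n raised to Re(s). The function name `abs_of_power` and the sample values are assumptions made for illustration only.

```python
import cmath
import math


def abs_of_power(n: float, s: complex) -> float:
    """abs(n^s) for a positive real n, using n^s = e^(s * ln n) as in step (2)."""
    if n <= 0:
        raise ValueError("n must be a positive real")
    return abs(cmath.exp(s * math.log(n)))


# Arbitrary (n, s) pairs; the imaginary part of s should not affect the result.
samples = [(2.0, 3 + 4j), (2.0, 3 - 100j), (10.0, 0.5 + 7j), (0.5, -2 + 1j)]
for n, s in samples:
    print(n, s, abs_of_power(n, s), n ** s.real)
```

In each printed row the last two columns should agree up to rounding, and changing only the imaginary part of `s` should leave them unchanged.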

Lemma 3: Triangle Inequality for Complex Numbers

If s,t are complex numbers, abs(s + t) ≤ abs(s) + abs(t)

Proof:

(1) There exist real numbers a, b, c, d (see Definition of complex number above) such that:

s = a + bi

t = c + di

(2) $\operatorname{abs}(s + t) = \operatorname{abs}((a + c) + (b + d)i) = \sqrt{(a + c)^2 + (b + d)^2}$ [See Definition of absolute value above for complex numbers]

(3) $\operatorname{abs}(s + t)^2 = (a + c)^2 + (b + d)^2 = [(a + c) + (b + d)i][(a + c) - (b + d)i] = [s + t][s + t]'$ [See Definition 2 above for details on conjugates of complex numbers]

(4) [s + t]' = (a + c) - (b + d)i = (a - bi) + (c - di) = s' + t'

(5) $\operatorname{abs}(s + t)^2 = [s + t][s' + t'] = ss' + [st' + s't] + tt'$

(6) We can also see that:

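$ss' = (a + bi)(a - bi) = a^2 + b^2 = \operatorname{abs}(s)^2$

$tt' = (c + di)(c - di) = c^2 + d^2 = \operatorname{abs}(t)^2$

$st' + s't = (a + bi)(c - di) + (a - bi)(c + di) = (ac - adi + bci + bd) + (ac + adi - bci + bd) = 2ac + 2bd$

$s't = (a - bi)(c + di) = ac + adi - bci + bd$, so $\operatorname{Re}(s't) = ac + bd$ and $st' + s't = 2\operatorname{Re}(s't)$.

(7) So, $ss' + [st' + s't] + tt' = \operatorname{abs}(s)^2 + 2\operatorname{Re}(s't) + \operatorname{abs}(t)^2$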

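(8) $2\operatorname{abs}(s't) \geq 2\operatorname{Re}(s't)$ since:

(a) $\operatorname{abs}(s't) = \sqrt{(ac + bd)^2 + (bc - ad)^2}$ [by Definition 3, since $s't$ has real part $ac + bd$ and an imaginary part whose square is $(bc - ad)^2$]

(b) $ac + bd \leq \operatorname{abs}(ac + bd) = \sqrt{(ac + bd)^2}$

(c) Since $(bc - ad)^2 \geq 0$, we have $\sqrt{(ac + bd)^2 + (bc - ad)^2} \geq \sqrt{(ac + bd)^2}$, so it is clear that $2\operatorname{abs}(s't) \geq 2\operatorname{Re}(s't)$.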

(9) So that we have:

$\operatorname{abs}(s + t)^2 \leq \operatorname{abs}(s)^2 + 2\operatorname{abs}(s't) + \operatorname{abs}(t)^2$

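(10) $\operatorname{abs}(s't) = \operatorname{abs}(s) \cdot \operatorname{abs}(t)$ since:

$\operatorname{abs}(s) \cdot \operatorname{abs}(t) = \sqrt{a^2 + b^2} \cdot \sqrt{c^2 + d^2} = \sqrt{a^2c^2 + a^2d^2 + b^2c^2 + b^2d^2}$

$\operatorname{abs}(s't) = \sqrt{(ac + bd)^2 + (bc - ad)^2} = \sqrt{a^2c^2 + 2abcd + b^2d^2 + b^2c^2 + a^2d^2 - 2abcd} = \sqrt{a^2c^2 + a^2d^2 + b^2c^2 + b^2d^2}$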

(11) This gives us:

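$\operatorname{abs}(s + t)^2 \leq \operatorname{abs}(s)^2 + 2\operatorname{abs}(s)\operatorname{abs}(t) + \operatorname{abs}(t)^2 = (\operatorname{abs}(s) + \operatorname{abs}(t))^2$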

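(12) Taking the square root of both sides gives us:

$\operatorname{abs}(s + t) \leq \operatorname{abs}(s) + \operatorname{abs}(t)$, since $\operatorname{abs}(s + t)$ and $\operatorname{abs}(s) + \operatorname{abs}(t)$ are both nonnegative.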

QED
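The key steps of this proof can be spot-checked on random inputs. The Python sketch below is only an illustration: the names `triangle_gap` and `check_lemma_steps`, the tolerance, the seed, and the sampling range are all arbitrary assumptions, and a numerical check of this kind supports the lemma without proving it.

```python
import random


def triangle_gap(s: complex, t: complex) -> float:
    """abs(s) + abs(t) - abs(s + t); Lemma 3 says this is never negative."""
    return abs(s) + abs(t) - abs(s + t)


def check_lemma_steps(s: complex, t: complex, tol: float = 1e-9) -> bool:
    """Check steps (8), (10) and the final inequality, up to rounding error."""
    cross = s.conjugate() * t  # this is s't in the proof's notation
    step8 = cross.real <= abs(cross) + tol
    scale = max(1.0, abs(s) * abs(t))
    step10 = abs(abs(cross) - abs(s) * abs(t)) <= tol * scale
    lemma = triangle_gap(s, t) >= -tol
    return step8 and step10 and lemma


rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [
    (complex(rng.uniform(-10, 10), rng.uniform(-10, 10)),
     complex(rng.uniform(-10, 10), rng.uniform(-10, 10)))
    for _ in range(1000)
]
print(all(check_lemma_steps(s, t) for s, t in samples))

# Equality case: t is a positive multiple of s, so the gap is (nearly) zero.
print(triangle_gap(1 + 2j, 2 + 4j))
```

The second print illustrates when the inequality becomes an equality: when `s` and `t` point in the same direction in the plane.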

References

- David Joyce, Dave's Short Course on Complex Numbers

- Alexander Bogomolny, "Useful Inequalities among Complex Numbers", Cut-The-Knot.org